High Performance

Database Consulting

- Build, repair and optimize complex databases

- Work directly with a top-tier database expert

Data Migration / ETL

Transforming database structures or moving from one database to another is a complex endeavor. You want to make sure that the target tables are well-designed and robust enough to handle future needs. Taking time up front to ensure proper design is far better than trying to compensate for bad design for years to come.

Porting data from the original tables to the new tables requires careful planning. If the structures are significantly different, the SQL ETL code can get somewhat intricate. For example, sometimes we will rework a wide table that has a large number of columns, most of which are rarely used, into a thin table that has one column for the old column name, and another for the value. This will require multiple passes through the old table.

Oftentimes the existing data has various problems that have to be dealt with prior to the migration. The most common is duplicate data, where information that should be in a single record is instead in multiple records, each with different (and sometimes conflicting) pieces of data. Perhaps a customer was entered multiple times by multiple operators, each time with a slighly different spelling of their name.

Database migration is the best time to eliminate these types of errors as best as possible, and oftentimes dealing with bad data takes more time than the actual porting process. Customers need to plan for this up front, and a process for finding all the bad data must be implemented before any ETL happens.

ETL (Extract-Transform-Load) is just a special type of SQL code used for porting and migration. It is typically done in bulk, one time only, so that performance is not the chief concern, but rather accuracy. If real-time ETL is needed to constantly add new data from some sort of pipeline, then performance becomes more important, to avoid locking problems and maintain responsiveness.

In either case, if you need assistance moving or transforming your database, please get in touch. I’d love to help out!

VIBRANT EMOTIONAL HEALTH

(RUNS THE NATIONAL SUICIDE PREVENTION HOTLINE)

NEW YORK, NY

Work Samples

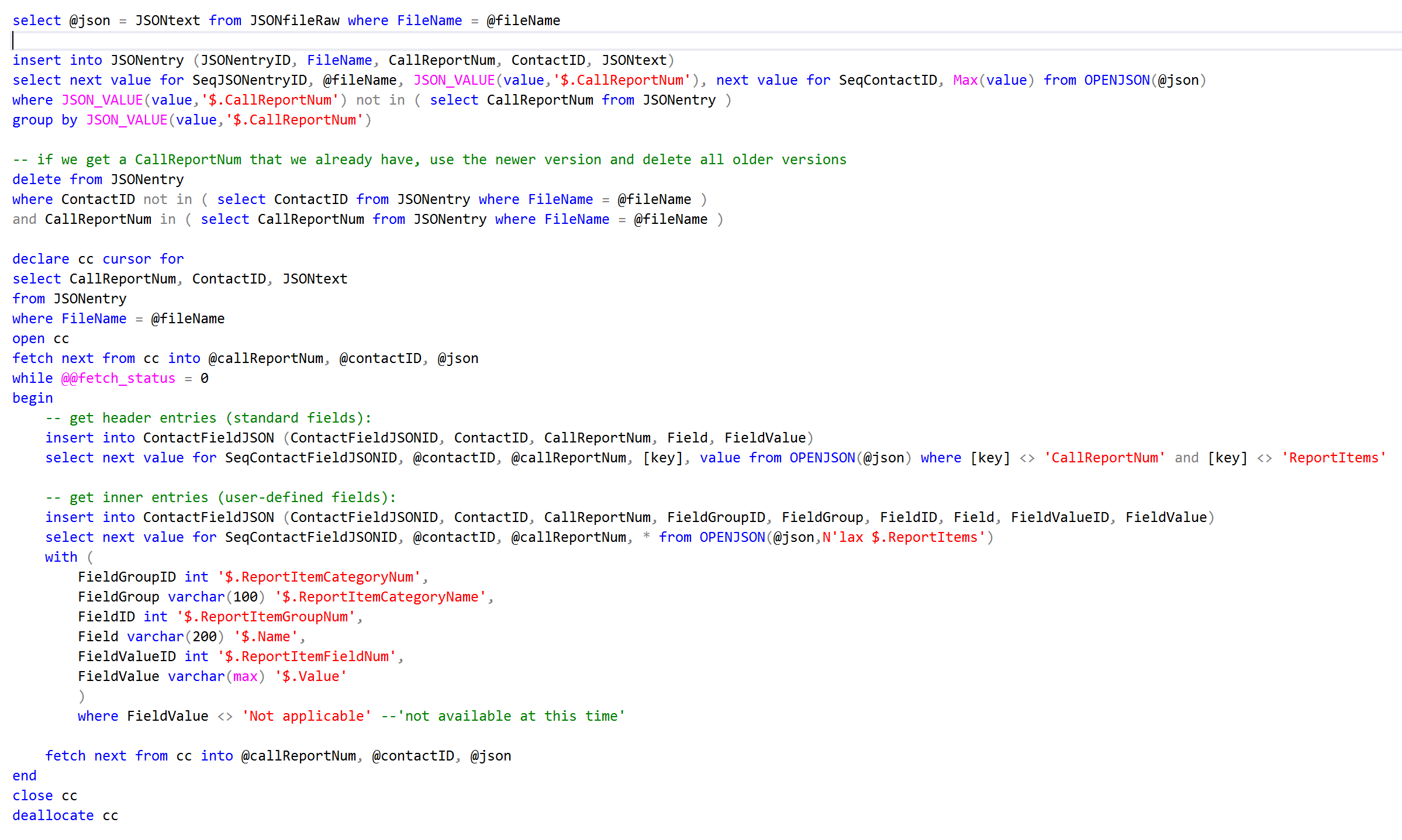

Importing JSON files

A snippet of code (part of a stored procedure) that imports structured JSON text files into SQL Server tables. This work was done for the National Suicide Hotline to help them build out a data warehouse with real-time analysis. New data was imported every few minutes using AWS Lambda.

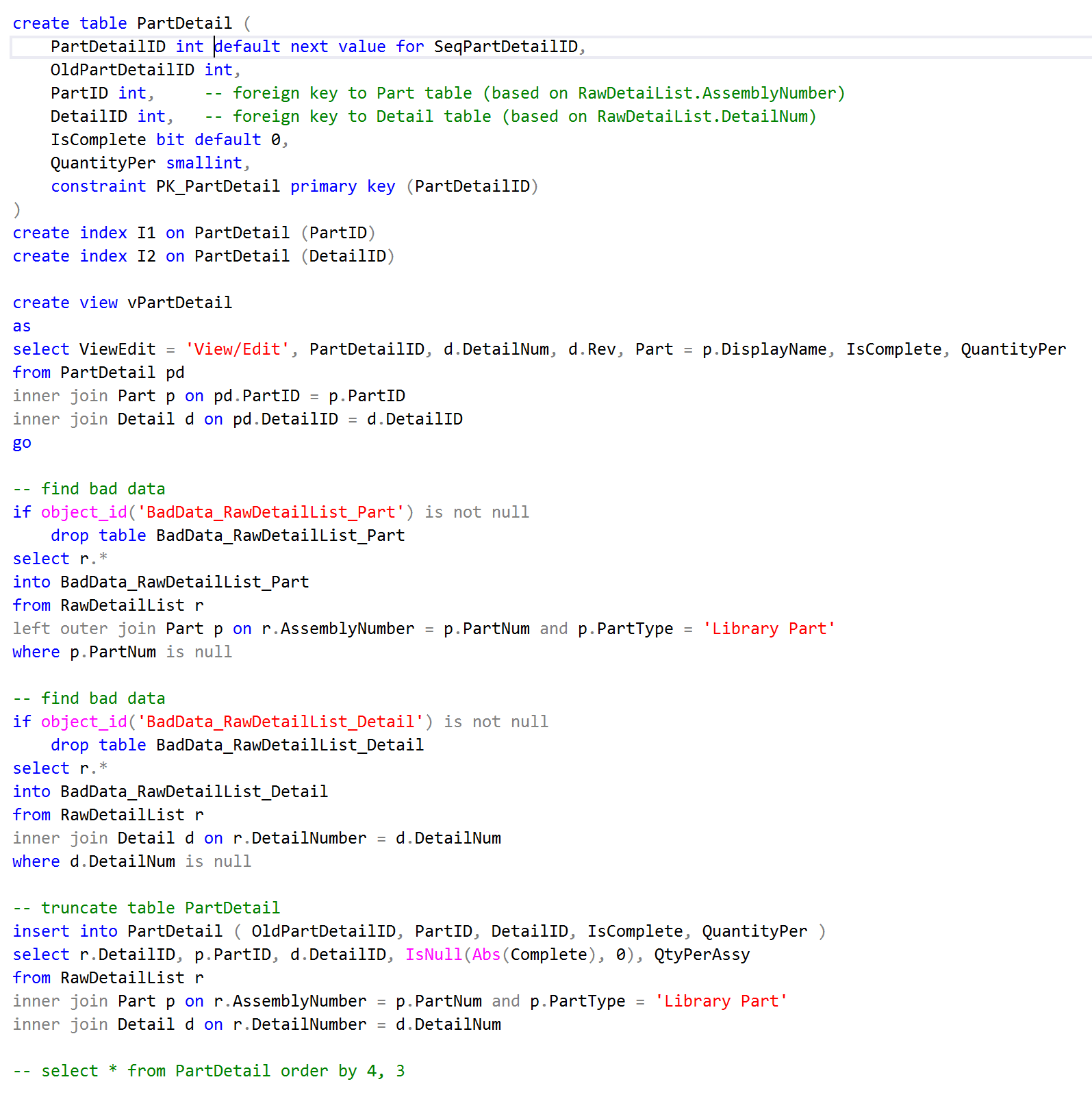

Migration Code: Microsoft Access to SQL Server

A snippet of code combining DDL for a Parts Detail table with ETL code that first finds and isolates bad data for later hand review, and then does the actual migration from a “raw” table (imported from Access) into the new table in SQL Server. This work was done for a Midwest manufacturing firm.